In general, technology and its producers are widely perceived as competent authorities. Even when serious breaches of the law and security problems come to light, this does not necessarily lead people to question their authority. Around 350,000 patients with cardiac pacemakers from one large manufacturer needed a firmware update in 2017 (US-FDA, 2017/08/29), and yet in 2020 no one seems to remember this, not even those who have a cardiac pacemaker and were thus directly affected.


Trust

This example illustrates that our concerns are not always proportional to the actual risks. The reason is trust. Instead of reacting with widespread panic, the public seems to trust the producers of such devices and the health institutions that implant and monitor them. Why are they considered more trustworthy than others? One partial answer is that humans do not act rationally in the sense of a rationalist conception of the human being.

One characteristic of trust is confidence in, and belief in, the good intentions of the people and institutions we trust. This allows us to reduce complexity and not to run away scared when potential risks emerge. An experiment at the Georgia Institute of Technology highlights this. The researchers developed an Emergency Evacuation Robot designed to guide students out of their dorms in case of an emergency. Sometimes it led them along the correct path and sometimes along a strange route out of the building. The latter case (for instance, when the robot drove past the emergency exit) did not generally lead the students to distrust or disobey it: “Eighty-one percent of participants indicated that their decision to follow the robot meant they trusted the robot” (Robinette et al., 2016, p. 4). They followed the machine because it had a good purpose inscribed: “many participants wrote that they followed the robot specifically because it stated it was an emergency guide robot on its sign” (Robinette et al., 2016). One might add that it is certainly not only about the purpose, but also about the institutions that claim to pursue this purpose and thereby vouch for the robot’s trustworthiness.

A study by the EU’s Fundamental Rights Agency has shown that a majority of people feel comfortable or very comfortable with biometric surveillance technology in public spaces when it is used for security purposes. The reasons given for this trust were the belief that the authorities use the technology ethically and that it results in increased security (EU-FRA, 2019, p. 18ff.). Police forces are still trusted institutions in Europe.

Trust Shifts to Fear

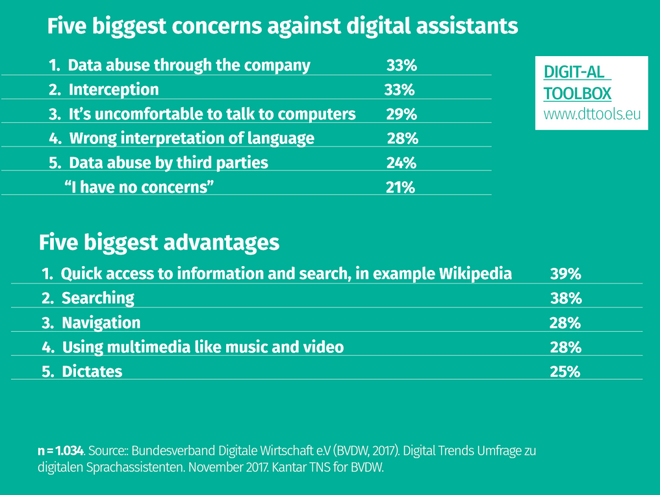

In times when people do not know whether to marvel at digitalisation or be scared of it, avoiding occasions for fear must also be treated as a relevant product design decision. Besides curiosity, people also harbour a critical attitude that can turn into anthropomorphism phobia, a fear of technology becoming too human-like or of humans becoming too technology-like.

Humans are willing to humanise machines as long as it remains clear that they are only machines (van Mensvoort, 2017, p. 175ff.). Eric Schmidt (Google) used the term “creepy line” to explain how big data platforms and IT companies deal with the risk that people might consider them to be going too far: “Google policy about a lot of these things is to get right up to the creepy line but not cross it. Implanting things in your brain is beyond the creepy line. At least for the moment until the technology gets better” (Schmidt, 2010).

Eric Schmidt on the “creepy line” (from 14:10)

Here, Eric Schmidt represents a management perspective on aspects related to the digital self. He understands the creepy line not as an ethical problem but as a matter of habituation. This perspective is fundamentally different from that of the majority of people, who are on the one hand fascinated by the opportunities technology opens up, but on the other hand constantly concerned about its impact on their digital self.

Preventing Humanisation

An interesting aspect of digitalisation in line with Schmidt’s dogma is that precisely the most common “intelligent” or “smart” devices around us avoid evoking associations with humanoids or robots. Amazon’s assistant Alexa and Apple’s Siri were deliberately named in line with this idea. Their only human touch is their voice. Loideain and Adams pointed out the problematic choices made by the designers of digital assistants in representing them as female (serving and helpful, with mystical names), thereby perpetuating discriminatory gender images: “These communications are delivered by witty and flirtatious characters revealed through programmed responses to even the most perverse questions” (Loideain & Adams, 2020, p. 2).

Distance to Human Brains

In particular, when we consider technology at the interface with thinking, technology’s ability to think marks the creepy line for many people. The brain seems to hold a special significance for human individuality. Cogito, ergo sum. So far, brain pacemakers are tolerated because they serve therapeutic purposes and enable people to participate in society. They would be less accepted if they served other purposes, such as learning or the “programming” of people’s brains. We must remember the ethically questionable and dangerous experiments of the 1950s, in which electroshocks and lobotomies were used to erase criminal dispositions or to “reprogram” homosexual people. Similar skepticism is growing with regard to the tracking and analysis of feelings and emotions, because we are convinced that the key features distinguishing humans from machines are the ability and the right to think independently, and that people can only feel free if they are allowed to feel freely.

Education: Reflecting on Our Creepy Lines

What is perceived as ‘too human-like’ is subject to habituation and may therefore change over time. The author Koert van Mensvoort argues that our fears could be used constructively if we registered them more consciously. On the one hand, we should try to prevent anthropomorphism phobia by eliminating its causes. On the other hand, it can serve as a guideline or indicator of how much technology people find acceptable or comfortable in a given context. Of course, the same applies to the other concerns and fears related to digitalisation.

What are our Creepy Lines?

Our fears regarding new developments are a natural reminder not to go too far, that is, not to cross our creepy line. In this sense, they are helpful signals prompting us to reflect on our needs and goals. On the other hand, critical thinking also involves reflecting on these concerns and fears and on their intellectual foundations.

What is acceptable? What is beyond the creepy line?

- Monitoring of the private space

- Processing of private data and sharing it with third states or other third parties

- Data analysis on the basis of individual profiles

- Non-invasive human-machine interaction

- Implants, prostheses

- Performance tracking by others (employer, partner, doctor, ...)

Nils-Eyk Zimmermann

Editor of Competendo. He writes and works on active citizenship, civil society, digital transformation, non-formal and lifelong learning, and capacity building. Coordinator of European projects, for example DIGIT-AL (Digital Transformation in Adult Learning for Active Citizenship) and the DARE network.

Blog: Civil Resilience.

Email: nils.zimmermann@dare-network.eu

References

European Union Agency for Fundamental Rights (EU-FRA, 2019). Facial recognition technology: fundamental rights considerations in the context of law enforcement. Luxembourg: Publications Office of the European Union. https://doi.org/10.2811/52597

Loideain, N.; Adams, R. (2020). From Alexa to Siri and the GDPR: The gendering of Virtual Personal Assistants and the role of Data Protection Impact Assessments. Computer Law & Security Review, Volume 36. https://doi.org/10.1016/j.clsr.2019.105366

Otto, P.; Gräf, E. (2017). 3TH1CS — A reinvention of ethics in the digital age? Berlin 2017.

Robinette, P.; Li, W.; Allen, R.; Howard, A. M.; Wagner, A. R. (2016). Overtrust of Robots in Emergency Evacuation Scenarios. ACM/IEEE International Conference on Human-Robot Interaction (HRI 2016). https://www.cc.gatech.edu/~alanwags/pubs/Robinette-HRI-2016.pdf

Schmidt, E. (2010). At the Washington Ideas Forum, Washington, D.C., October 1, 2010. https://www.youtube.com/watch?v=CeQsPSaitL0 (from 14:10)

U.S. Food and Drug Administration (US-FDA, 2017/08/29). Firmware Update to Address Cybersecurity Vulnerabilities Identified in Abbott’s (formerly St. Jude Medical’s) Implantable Cardiac Pacemakers: FDA Safety Communication. https://www.fda.gov/medical-devices/safety-communications/firmware-update-address-cybersecurity-vulnerabilities-identified-abbotts-formerly-st-jude-medicals

van Mensvoort, K. (2017). Antropomorphismus-Phobie. In: Otto, P.; Gräf, E. (2017).

The Digital Self

This text was published as part of the project DIGIT-AL (Digital Transformation in Adult Learning for Active Citizenship).

Zimmermann, N. with Martínez, R. and Rapetti, E.: The Digital Self (2020). Part of the reader: Smart City, Smart Teaching: Understanding Digital Transformation in Teaching and Learning. DARE Blue Lines, Democracy and Human Rights Education in Europe, Brussels 2020.