Fake News

The term fake news is not new at all: in an 1894 illustration by Frederick Burr Opper (Opper, 1894), a reporter is seen running to bring “fake news” to the desk. However, it became ubiquitous (Farkas & Schou, 2018) during the 2016 US presidential election, when it was used by liberals against right-wing media and, notably, by then-candidate Donald Trump against critical news outlets.

The term has been used to refer to more or less every form of problematic, false, misleading, or partisan content (Tandoc, Lim & Ling, 2018). It has thus been criticised for its lack of “definitional rigour” and for having been “appropriated by politicians around the world to describe news organisations whose coverage they find disagreeable” (Wardle and Derakhshan, 2017). A UNESCO handbook (Ireton and Posetti, 2018) even struck the term through on its cover.

Information disorder

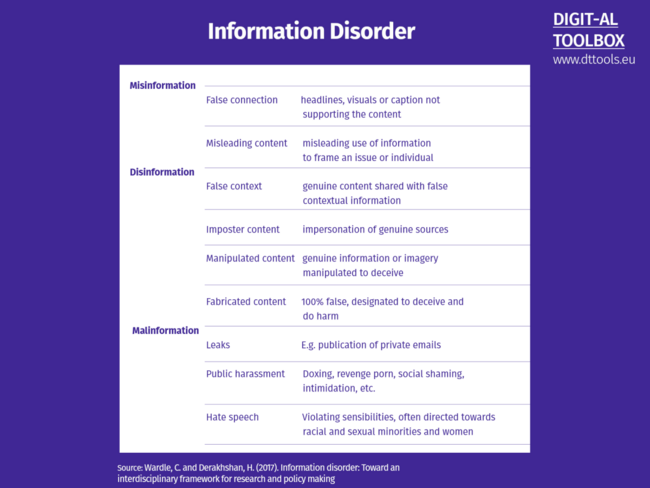

The concept of information disorder, first proposed by Claire Wardle and Hossein Derakhshan in a 2017 report for the Council of Europe, includes disinformation, misinformation and malinformation. We do not use the term fake news, which we discuss in a separate box. Each of these macro-categories contains different subcategories; the most important are presented here. Some of the following definitions are taken from another work by Claire Wardle (2017). Alice Marwick and Rebecca Lewis (2017) discuss malinformation in more detail.

Disinformation, Misinformation, Malinformation?

Disinformation: False information shared to cause harm.

Misinformation: False information shared without meaning any harm.

Malinformation: Genuine information shared to cause harm.

Information Disorder

The imbalance of information in a media environment, caused by dis-, mal- and misinformation.

Malinformation, including leaks, harassment and hate speech, is a slightly different issue from the others. Satire and parody are not included in the table, but they have the potential to fool, and a website with fabricated content might claim to be a satire website to defend itself.

Some content may fall into more than one category, and in some cases we may not be able to categorize something with full certainty, for example because we do not know the motivations behind it. We may also legitimately disagree with these specific definitions. Still, what matters most is that not all false news is created equal: we must understand the complexity of the information environment, and a conceptual framework may help us do that. When people say “fake news” (a term we prefer not to use, see box), they might be thinking of “fabricated content”, news that is completely false: this is just one of our categories, and may not be the biggest problem.

In October 2019, Claire Wardle noted that since 2016 there has been an increased “weaponization of context”: the use of warped and reframed genuine content, which is more effective than fabricated content at persuading people and is less likely to be picked up by the AI systems that social networks use as part of their fact-checking efforts.

This seemed to be confirmed during the COVID-19 pandemic and the consequent “infodemic”, defined by the World Health Organization (2020) as “an over-abundance of information”: the Reuters Institute for the Study of Journalism found that 59% of dis- and misinformation was “reconfigured” (false context, misleading or manipulated content), while only 38% was fabricated (Brennen, Simon, Howard and Nielsen, 2020).

The Dangers

Information disorder poses many dangers (Wardle and Derakhshan, 2017). In 2016, a man opened fire in a restaurant and pizzeria in Washington, D.C., looking for a basement in which children were supposedly held prisoner. There were no children, and not even a basement; the belief was part of a conspiracy theory known as Pizzagate (Pizzagate, 2017). Climate-related conspiracy theories pose a threat to the environment, and medical misinformation poses a threat to health and can even lead to riots, as happened in Novi Sanzhary, a small town in Ukraine, because of the fear that people with coronavirus were going to be brought there (Miller, 2020).

News is one of the raw materials of good citizenship, as “The healthy functioning of liberal democracies has long been said to rely upon citizens whose role is to learn about the social and political world, exchange information and opinions with fellow citizens, arrive at considered judgments about public affairs, and put these judgments into action as political behavior” (Chadwick, Vaccari, & O’Loughlin, 2018). Information is “as vital to the healthy functioning of communities as clean air, safe streets, good schools, and public health” (Knight Commission, 2009). Dis- and misinformation pollute the information ecosystem and have “real and negative effects on the public consumption of news”. Distrust can become a self-perpetuating phenomenon: “Groups that are already cynical of the media — trolls, ideologues, and conspiracy theorists — are often the ones drawn to manipulating it. If they are able to successfully use the media to cover a story or push an agenda, it undermines the media’s credibility on other issues” (Marwick & Lewis, 2017). In the long term, this is a risk for democracy (DCMS, 2018).

Accusations of disinformation can also become a weapon in the hands of authoritarian regimes: world leaders use them to attack the media (The Expression Agenda Report 2017/2018), and in 2019, 12% of journalists imprisoned for their work were detained on false news charges (Beiser, 2019).

The ‘Assembly Line’

Some viral (and false) news stories during the 2016 US elections were created by people in the small town of Veles in Macedonia. Their biggest hit was an article titled “Pope Francis Shocks World, Endorses Donald Trump for President”, which was of course entirely false (Silverman & Singer-Vine, 2016). They claim they did it purely for economic reasons, to make money from the ads (Subramanian, 2017). But this is just one case, and the “assembly line” of dis- and misinformation can take different forms.

Wardle and Derakhshan (2017) identify three elements (the agent; the message; the interpreter) and three phases (creation; production; distribution) of “information disorder”.

The agents could be official actors (i.e. intelligence services, political parties, news organisations, PR firms or lobbying groups) or unofficial actors (groups of citizens that have become evangelised about an issue) who are politically or economically motivated. Social (the desire to be connected with a certain group) and psychological reasons can also play a role.

The agent who conceives and creates the idea on which the content is based is often different from the one who actually produces it and from the one who distributes and reproduces it. Once a message has been created, it can be reproduced and distributed endlessly by many different agents, all with different motivations. Interpreters may become agents themselves: a social media post shared by several communities could be picked up and reproduced by the mainstream media and further distributed to other communities.

The same piece of information might originate as satire, or even as real news, and then become misinformation in the eyes of different interpreters or in the hands of different agents. When Notre Dame caught fire in 2019, an article reporting that a gas tank and some Arabic documents had been found near the cathedral began to circulate: although the article was real, it dated from 2016, three years earlier, and thus became a case of false context (Bainier & Capron, 2019).

Russian Trolls and the Usual Suspects

In recent years there has been a lot of talk about “Russian trolls”, as if they were the main, if not the only, agents responsible for the existence of the information disorder. In this section we will see who they are, what they are responsible for, and whether there are other explanations for what is happening.

Troll

A real person who “intentionally initiates online conflict or offends other users to distract and sow divisions by posting inflammatory or off-topic posts in an online community or a social network. Their goal is to provoke others into an emotional response and derail discussions” (Barojan, 2018).

Trolling itself is as old as online forums, but “Russian trolls” refers to a slightly different phenomenon. While ordinary trolls do what they do for fun (a strange kind of fun, called the “lulz” in jargon), it has been demonstrated that the Internet Research Agency (IRA), based in Saint Petersburg and sometimes called the “Russian troll factory”, contracted people to influence public opinion abroad on behalf of the Russian state (MacFarquhar, 2018). The IRA is probably owned by Yevgeny Prigozhin, an oligarch linked with Russian president Vladimir Putin. Inside Russia, the IRA also has the primary function of making meaningful discussion amongst civil society impossible, a practice labelled “neutrollization” (Kurowska & Reshetnikov, 2018).

While most of their activity is in Russia, it was revealed that they also interfered in the 2016 U.S. elections (Linvill & Warren, 2018) in what can be considered an information operation, a deliberate and systematic attempt by unidentified actors “to influence public opinion by spreading inaccurate information with puppet accounts” (Jack, 2018).

It has been argued that this operation was aimed less at convincing anyone of anything than at spreading uncertainty and sowing mistrust and confusion, a purpose that is typical of many disinformation campaigns (Wardle & Derakhshan, 2017). To this end, Russian trolls have also made use of bots (automated social media accounts run by algorithms) and botnets (networks of bots) (Barojan, 2018). Russia is not the only country that has been involved in this kind of information operation: in 2019 Twitter explicitly accused China of an information operation directed at Hong Kong (Twitter Safety, 2019). Countries can also engage in information campaigns, which differ from information operations because, while their content might be true or false, their author is not hidden.

However, foreign countries are not the only cause of the information disorder (Gunitsky, 2020). Other factors, including social media and phenomena related to social media, have also been blamed.

Algorithms and Other Suspects

Search algorithms decide which links appear on the first page of a search engine’s results (Google’s original ranking algorithm is called PageRank). Social media algorithms also decide what you see. On your Facebook newsfeed you might see a link to a junk news website, a mainstream newspaper or a photo of your nephew, depending on what the algorithm prioritizes. The algorithm behind suggested videos on YouTube has been accused of amplifying junk content, misinformation, conspiracy theories, etc. (Carmichael & Gragnani, 2019).

The serious problem with algorithms is that they are often not transparent: we don’t know how they work. So while it seems unlikely that Google and Facebook are actively and consciously promoting disinformation, it may well be that their algorithms accidentally favour such content, for example because it gets high engagement and is thus likely to attract more clicks and generate more revenue for the platforms (Bradshaw & Howard, 2018).

When you see a physical ad, for example on a billboard, everyone else can see it too. On the web this works differently: on Facebook and elsewhere, you can make your ads visible only to your target audience, selected by criteria such as location, age, etc. This is called microtargeting and it is commonly used for commercial ads. However, it can become problematic with political ads. If a politician makes a false claim on a physical billboard or on TV, journalists are able to debunk it. If instead they make it in a microtargeted ad, it is more difficult to identify and correct. For this reason, such ads are called dark ads.

The problem, however, has been partially resolved in recent years. In 2019, in response to the harsh criticism it had received, Facebook launched an Ad Library in which all political ads that have appeared on the platform are available (Constine, 2019). Additionally, you can see who paid for each ad. Of course, these systems can be tricked, as you might be able to create a political ad that Facebook does not recognise as such, or to pay through a front person or organization.

Individuals also play an important role by exercising their information preferences on the internet. Some academic studies have shown that people are more likely to share information that conforms to their pre-existing beliefs with their social networks, deepening ideological differences between individuals and groups. It has thus been argued that social media creates ideological segregation, leading to the creation of “echo chambers”. The term is a metaphor for the situation in which a person interacts primarily within a group of people who share the same interests or political views (Dubois & Blank, 2018). A somewhat related concept is the “filter bubble”, a term coined by internet activist Eli Pariser (What is a Filter Bubble, 2018) to refer to the selective presentation of information by website algorithms, including those of search engines and social media. This may also help the circulation of false news. However, there is no full consensus among scholars on how these phenomena operate on the internet (Flaxman, Goel & Rao, 2016), and some studies argue that the danger is non-existent or overstated (Fletcher & Nielsen, 2018).

In “Network Propaganda”, a comprehensive study of media coverage of the 2016 U.S. presidential elections published in October 2018, Yochai Benkler, Robert Faris, and Hal Roberts argue that the cause of the current situation is not the usual suspects (social media, Russian propaganda and fake news websites), but a long-term change in the right-wing media ecosystem (e.g. Fox News), which has abandoned journalistic norms and created a propaganda feedback loop.

While they focus only on the American case, their method and approach could be used to analyse the mainstream media ecosystem in other countries, and many of their insights may be similarly valid. Interestingly, a study in the UK examined the role of “traditional” British tabloids and found that the more users share tabloid news on social media, the more likely they are to share exaggerated or fabricated news (Chadwick, Vaccari, & O’Loughlin, 2018).

Any solution?

As the problems behind the information disorder are many, and not all of them are clear, it is obvious that, unfortunately, there is no silver bullet. In particular, solutions aimed at fighting foreign disinformation cannot be sufficient, even if they were able to nullify it completely, which is unlikely.

One part of the solution may be fact-checking. The term usually refers to the internal verification processes that journalists put their own work through, but fact-checkers (or debunkers) dealing with disinformation are involved in ex-post fact-checking: verifying news published by other media and publishing the results. Facts alone, however, are not enough to combat disinformation (Silverman, 2015), as it may continue to shape people’s attitudes even when debunked (Thorson, 2016). Additionally, the people who see a fact-check are often not those who believed the incorrect news, but people who never saw it, or who saw it and recognised or suspected that it was false to begin with.

Excluding false news websites from advertising may be helpful against those with only an economic motivation. However, as we have seen, not all creators of disinformation have an economic motivation. Moreover, this practice may be problematic, as it can lead to a form of economic censorship controlled by corporations.

Media literacy is widely acknowledged as a key mitigation factor for disinformation, as it teaches people how to recognise it. It “cannot [...] be limited to young people but needs to encompass adults as well as teachers and media professionals” in order to help them keep pace with digital technologies (HLEG, 2018). Clearly, this is not a fast solution. Organisations like UNESCO and the European Union (particularly with the DigComp) have been active in improving media literacy.

The role of mainstream media is fundamental in many ways. First of all, they should maintain a high standard of quality: if they themselves are a source of misinformation through exaggerations, clickbait, etc., they are part of the problem, not of the solution. If they are able to do so, and to recover the trust they have lost, people will be less inclined to search for news in other, less trustworthy places. Some minor tricks might also be helpful, such as making the date of an article visible in the image preview on social networks, to prevent it from being used in a false context. But more could be done: transparency about (non-confidential) sources and methods can help the public learn how good journalism works and distinguish it from bad examples.

An alternative approach: Constructive Journalism

Conclusions for Education

There are many high-quality approaches that have been developed to work with youth on the topics of hate speech and disinformation. They range from week-long workshop concepts to short courses adaptable to be used in the classroom.

Especially in social media work with young people, there are high-quality concepts that cover online and offline, from developing capacities for media use and production to the critical reflection and consumption of media as well as observing the mediascape from a perspective of consumerism. They are competency-centred and address knowledge, skills, attitudes and values in a holistic way.

These approaches need to be transferred into lifelong learning contexts and, thanks to existing, well-developed methodologies, they could readily be adapted to the needs of adult learners.

In particular, in the field of Education for Democratic Citizenship and Human Rights (EDC/HRE), these concepts integrate the three basic dimensions of digitalisation and civic education in a positive and enabling way:

- To understand how digitalisation is shaping people and societies, including its impact on youth work and Adult Education

- To be able to take people’s digital cultures into account in lifelong learning (LLL) practices

- To be able to encourage people to shape the process of digitalisation

However, there seem to be few pedagogical concepts that also place these phenomena in the context of an analysis of the media and the media market in our countries. It is of considerable importance to connect these aspects to the perspectives of democracy, the formation of opinion and human rights.

Game-based Learning on Information Disorder

Play online:

- Are you you? Interactive documentary and challenge: beat face recognition systems. By Tijmen Schep and the Sherpa project.

- Bad News. Online game: from fake news to chaos!

- Disinformation Diaries. Learn how disinformation interferes with elections.

- Fake it to make it. A game about fake news.

- Online test: filter bubble. Interactive introduction by Zentrum fir politesch Bildung (ZpB), Luxembourg.

- Harmony Square. Game about fake news.

- How normal am I? By Tijmen Schep.

- Test: Human vs. AI. Can we tell the difference anymore?

- Your Data Mirror. Learn about the mechanisms of data collection and the impact of this practice on society. By Interactive Media Foundation.

- Week with Wanda. A game about the impact of artificial intelligence.

Fazıla Mat and Niccolò Caranti

The article is derived from the Dossier: “Disinformation” published in 2019 by the Resource Centre on Media Freedom in Europe, a website curated by Osservatorio Balcani e Caucaso Transeuropa (OBCT).

References

Bainier, C. & Capron, A. (16 April 2019). How to avoid falling for fake news about the Notre-Dame fire. The Observers.

Brennen, J. S., Simon, F., Howard, P. N. & Nielsen, R. K. (7 April 2020). Types, sources, and claims of COVID-19 misinformation. Reuters Institute for the Study of Journalism.

Chadwick, A., Vaccari, C. & O’Loughlin, B. (2018). Do tabloids poison the well of social media? Explaining democratically dysfunctional news sharing. New Media & Society, 20(11), 4255–4274. https://doi.org/10.1177/1461444818769689

Digital, Culture, Media and Sport (DCMS) Committee of the House of Commons (July 2018). Disinformation and ‘fake news’: Interim Report, House of Commons.

Ireton, C. & Posetti, J. (2018). Journalism, fake news & disinformation: Handbook for journalism education and training. Paris: UNESCO.

Knight Commission on the Information Needs of Communities in a Democracy (October 2009). Informing Communities: Sustaining Democracy in the Digital Age. Aspen Institute. https://production.aspeninstitute.org/publications/informing-communities-sustaining-democracy-digital-age

Marwick, A. & Lewis, R. (2017). Media manipulation and disinformation online. Data & Society.

Miller, C. (9 March 2020). A Small Town Was Torn Apart By Coronavirus Rumors. Buzzfeed News.

Opper, F. B. (1894). The fin de siècle newspaper proprietor. New York: Keppler & Schwarzmann, 7 March 1894. [Photograph] Retrieved from the Library of Congress.

Silverman, C. & Singer-Vine, J. (16 December 2016). The True Story Behind The Biggest Fake News Hit Of The Election. Buzzfeed News.

Subramanian, S. (15 February 2017). Inside the Macedonian Fake-News Complex. Wired.

Wardle, C. (October 2019). Understanding Information Disorder, First Draft.

Wardle, C. & Derakhshan, H. (2017). Information disorder: Toward an interdisciplinary framework for research and policy making. Council of Europe.

World Health Organization (2020). Novel Coronavirus (2019-nCoV) - Situation Report - 13.

The Impact of Digitalisation on Media and Journalism

This text was published in the frame of the project DIGIT-AL - Digital Transformation Adult Learning for Active Citizenship.

Caranti, N. & Vivona, V. (2020). The Impact of Digitalisation on Media and Journalism. Part of the reader: Smart City, Smart Teaching: Understanding Digital Transformation in Teaching and Learning. DARE Blue Lines, Democracy and Human Rights Education in Europe, Brussels 2020.