Intelligent machines are an old dream of mankind. In recent years, machine learning methods have brought us a step closer to it. But human intelligence is still unrivalled.

In 1955, the Rockefeller Foundation received an ambitious grant application: ten researchers led by the young mathematician John McCarthy planned to make "significant progress" in just two months in a field that was given its name in this very application: artificial intelligence. Their optimism was convincing, and the hand-picked group spent the summer of 1956 at Dartmouth College in Hanover, New Hampshire, finding out "how to make machines use language, form abstractions and concepts, solve the kinds of problems now reserved for humans and improve themselves." To date, there is no binding definition of artificial intelligence, but the capabilities mentioned in McCarthy's proposal form the core of what machines should be able to do to deserve this title.

The Initial Idea behind Artificial Intelligence

"To make machines use language, form abstractions and concepts, solve the kinds of problems now reserved for humans and improve themselves."

John McCarthy, 1955

The Dartmouth conference is now considered the starting point of AI research. In fact, the researchers were already in the midst of it at the time; the only thing the enterprise still needed was a catchy name. The neurophysiologist Warren McCulloch and the logician Walter Pitts had designed the first artificial neural networks as early as 1943, and the computer scientist Allen Newell and the social scientist Herbert Simon presented their program "Logic Theorist" at the conference, which was able to prove logical theorems. Noam Chomsky was working on his generative grammar, according to which our ability to form ever new sentences rests on a system of rules that remains unconscious. If these rules were spelled out, shouldn't it be possible to get machines to use language?

In 1959, Herbert Simon, John Clifford Shaw and Allen Newell presented their General Problem Solver, which could play chess and solve the Towers of Hanoi. In 1966, Joseph Weizenbaum made a name for himself with ELIZA, a dialogue program that imitated a psychotherapist. He himself was surprised by the success of this rather simple system, which merely reacted to signal words.
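How little machinery a program that reacts to signal words needs can be shown in a few lines. The following is a minimal sketch in the spirit of ELIZA, not Weizenbaum's original script: the rules, the replies and the names `RULES`, `DEFAULT_REPLY` and `respond` are invented for illustration.

```python
import re

# Hypothetical keyword rules, invented for illustration; they are not taken
# from Weizenbaum's original script.
RULES = [
    (re.compile(r"\bmother\b|\bfather\b", re.IGNORECASE), "Tell me more about your family."),
    (re.compile(r"\bI feel (.+)", re.IGNORECASE), "Why do you feel {0}?"),
    (re.compile(r"\balways\b", re.IGNORECASE), "Can you think of a specific example?"),
]
DEFAULT_REPLY = "Please go on."


def respond(sentence: str) -> str:
    """Return the reply of the first rule whose signal word occurs in the sentence."""
    for pattern, template in RULES:
        match = pattern.search(sentence)
        if match:
            # Reuse the captured words, if any, so the reply appears attentive.
            groups = [g.rstrip(".!?") for g in match.groups() if g]
            return template.format(*groups)
    # No signal word found: fall back to a neutral prompt.
    return DEFAULT_REPLY


print(respond("I feel tired today."))  # Why do you feel tired today?
print(respond("Nice weather."))        # Please go on.
```

The program understands nothing about what is said; it only matches patterns and echoes fragments of the input, which is why the impression it made on users was so surprising.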

Setbacks and New Approaches

In this optimistic early phase, intelligent machines seemed to be within reach of the new discipline. But setbacks were not long in coming. A translation program for English and Russian, which the U.S. Army had wanted during the Cold War, could not be realized, and autonomous tanks could not be developed as quickly as the researchers had promised. At the end of the 1970s and again ten years later, military and government funders concluded that the researchers had promised too much and cut funding massively. These phases went down in history as the AI winters.

In retrospect, it is easier to see why the early AI researchers underestimated their project: "The study is to proceed on the basis of the conjecture that every aspect of learning or any other feature of intelligence can in principle be so precisely described that a machine can be made to simulate it", says the second sentence of the funding application cited above. Such a precise description is still illusory today. After more than 60 years of AI research, it has become much clearer how little of human intelligence has been understood so far. While the first generation of AI researchers had focused on universal problem solvers, the first, more modest expert systems were created in the 1970s: dialogue programs that specialized in a specific field, such as the diagnosis of infections or the analysis of data from mass spectrometers.

To build these systems, experts were asked about their approach, and developers tried to reproduce it in a program. But this type of programming, called "symbolic", covers only the part of human cognition that people are aware of and can spell out. Everything that happens more or less unconsciously is lost in the process. For example, how do you recognize a familiar face in a crowd? And what exactly distinguishes a dog from a cat? This is where machine learning methods, to which we owe the current boom in AI, show their strength: they work out their fine structure themselves, so nobody has to spell out the world for them.

Machine Learning and a New Boom

The field of machine learning comprises numerous procedures, the most popular of which is currently deep learning, based on artificial neural networks (ANNs). ANNs are roughly modelled on the neural networks of the brain. Artificial neurons are arranged in layers to form a network. They pick up activation signals and compute an output signal from them. This process runs on conventional computers with processors optimised for the purpose. ANNs have an input layer, which receives the data (for example, the pixel values of an image), followed by a varying number of hidden layers in which the calculation takes place, and an output layer that presents the result. The connections between the neurons are weighted, so they can amplify or weaken the signals. ANNs are not programmed but trained: they start with random weights and at first produce a random result, which is then corrected again and again over thousands of training runs until the network works reliably. Unlike humans, these systems do not need prior knowledge about possible solutions.
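The training loop described above can be sketched in plain Python. This is a minimal illustration under stated assumptions, not how production deep learning systems are built: the class `TinyNetwork`, the layer sizes, the learning rate, the choice of backpropagation with a sigmoid activation, and the toy task (the logical OR of two inputs) are all chosen here for the example.

```python
import math
import random


def sigmoid(x: float) -> float:
    """Squash an activation into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


class TinyNetwork:
    """Input layer (2 values) -> hidden layer (3 neurons) -> output layer (1 neuron)."""

    def __init__(self, n_in=2, n_hidden=3, seed=0):
        rng = random.Random(seed)
        # Training starts from random weights; the last entry per neuron is its bias.
        self.w_hidden = [[rng.uniform(-1, 1) for _ in range(n_in + 1)]
                         for _ in range(n_hidden)]
        self.w_out = [rng.uniform(-1, 1) for _ in range(n_hidden + 1)]

    def forward(self, inputs):
        # zip() stops at the shorter sequence, so the bias is added separately.
        hidden = [sigmoid(sum(w * x for w, x in zip(ws, inputs)) + ws[-1])
                  for ws in self.w_hidden]
        output = sigmoid(sum(w * h for w, h in zip(self.w_out, hidden)) + self.w_out[-1])
        return hidden, output

    def train_step(self, inputs, target, rate=0.5):
        hidden, output = self.forward(inputs)
        # Error signal at the output neuron (squared error through the sigmoid).
        delta_out = (output - target) * output * (1 - output)
        # Pass the error back to each hidden neuron along its weighted connection.
        delta_hidden = [delta_out * self.w_out[j] * h * (1 - h)
                        for j, h in enumerate(hidden)]
        # Nudge every weight slightly in the direction that reduces the error.
        for j, h in enumerate(hidden):
            self.w_out[j] -= rate * delta_out * h
        self.w_out[-1] -= rate * delta_out
        for j, ws in enumerate(self.w_hidden):
            for i, x in enumerate(inputs):
                ws[i] -= rate * delta_hidden[j] * x
            ws[-1] -= rate * delta_hidden[j]


def mean_squared_error(net, data):
    return sum((net.forward(x)[1] - t) ** 2 for x, t in data) / len(data)


# Toy task (an assumption for illustration): the logical OR of two inputs.
DATA = [([0, 0], 0), ([0, 1], 1), ([1, 0], 1), ([1, 1], 1)]

net = TinyNetwork()
error_before = mean_squared_error(net, DATA)
for _ in range(2000):  # the result is corrected again and again
    for inputs, target in DATA:
        net.train_step(inputs, target)
error_after = mean_squared_error(net, DATA)
print(f"error before training: {error_before:.3f}, after: {error_after:.3f}")
```

Nothing in the code states what OR means. The rule ends up encoded in the weights, which is also why the finished network offers no explanation of its results.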

Computing with artificial neural networks also has early precursors: as early as 1958, Frank Rosenblatt presented the Perceptron, a system that was able to recognize simple patterns with the help of photocells and neurons simulated with cable connections. It seemed clear to Rosenblatt at the time that the future of information processing would lie in such statistical rather than logical procedures. But the Perceptron often did not work very well. When Marvin Minsky and Seymour Papert devoted a whole book to the limits of this method in 1969, interest in ANNs died down again. That the method is now experiencing such a boom is due to three things: better algorithms are available, such as procedures for multi-layer networks; there is enough data to train these systems; and computers have sufficient capacity to run them. In addition, the systems are proving their usefulness in daily use.
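One well-known example of such a limit is the XOR function, which a single-layer perceptron cannot learn because no single straight line separates its two classes. The sketch below is a software illustration of the classic perceptron learning rule, not a reconstruction of Rosenblatt's hardware; the function name `train_perceptron` and the epoch limit are assumptions made for the example.

```python
def train_perceptron(samples, max_epochs=100):
    """Train a single threshold neuron; return (converged, weights, bias)."""
    weights, bias = [0, 0], 0
    for _ in range(max_epochs):
        errors = 0
        for inputs, target in samples:
            activation = sum(w * x for w, x in zip(weights, inputs)) + bias
            output = 1 if activation > 0 else 0
            error = target - output
            if error != 0:
                # Shift weights and bias towards the correct answer.
                weights = [w + error * x for w, x in zip(weights, inputs)]
                bias += error
                errors += 1
        if errors == 0:
            # A full pass without mistakes: the neuron has learned the task.
            return True, weights, bias
    return False, weights, bias


AND = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
XOR = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 0)]

print(train_perceptron(AND)[0])  # True: one line separates the two classes
print(train_perceptron(XOR)[0])  # False: no single line can separate them
```

Multi-layer networks, for which training procedures only became available later, do not share this particular limitation.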

One Technology, Many Applications

Systems that work with machine learning now not only play chess and Go; they also analyse X-rays or images of skin changes for signs of cancer, translate texts and calculate the placement of advertising on the Internet. One of the most promising areas of application is predictive maintenance: appropriately trained systems recognize when, for example, the operating noise of a machine changes. The machine can then be serviced before it fails and paralyses production.

The Weak Points

Learning systems find structures in large amounts of data that we would otherwise overlook. However, their hunger for data is also a weakness of these procedures. They can only be used where there is enough current data in the right format to train them. Another problem is the opacity of the learning process: the system provides results but no justification for them. This is problematic when algorithms decide, for example, whether someone is granted a loan. In addition, learning systems use data from the past to build models that classify new data, and thus tend to preserve or reinforce existing structures.

A New Winter?

In view of these problems, more and more voices are predicting that the current hype will be followed by a phase of disappointment, a new AI winter. Indeed, debates about superintelligence are likely to raise unrealistic expectations. But the AI winters came about because researchers had their funding cut. Currently, we are seeing the opposite: national AI funding strategies are springing up, and more and more research centres and chairs are being established. Above all, however, today's machine learning methods are already delivering ready-to-use products for industry, commerce, science and the military. All this speaks against a new AI winter.

But we should take a more realistic view of what is feasible: the current AI systems are specialists. In the complex world in which we move, they will by no means be able to do without human knowledge. Perhaps the future of AI systems lies in hybrid procedures that combine both approaches, symbolic programming and learning.

Illustration: Felix Kumpfe/Atelier Hurra

Manuela Lenzen

Manuela Lenzen holds a PhD in philosophy and is a German science journalist.

Das Gehirn

This article was originally published in German at https://www.dasgehirn.info/, a project of the Gemeinnützige Hertie-Stiftung and the Neurowissenschaftliche Gesellschaft e.V. in cooperation with ZKM | Zentrum für Kunst und Medien Karlsruhe. Scientific support: Dr. Marc-Oliver Gewaltig.

This article is distributed under the terms of the Creative Commons Attribution-Non Commercial 3.0 License, which permits non-commercial use, reproduction and distribution of the work.

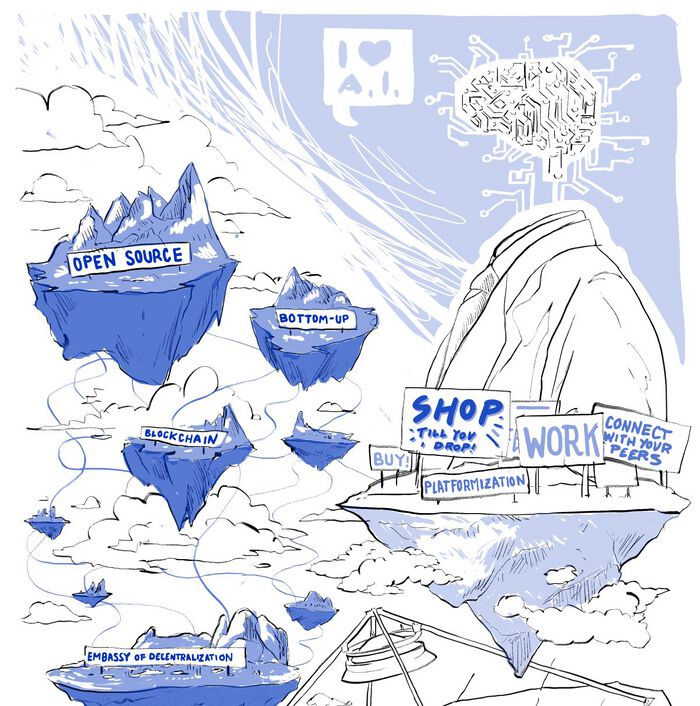

The Internet, Big Data & Platforms

This text was published as part of the project DIGIT-AL - Digital Transformation Adult Learning for Active Citizenship.

Zimmermann, N.: The Internet, Big Data & Platforms (2020). Part of the reader: Smart City, Smart Teaching: Understanding Digital Transformation in Teaching and Learning. With guest contributions of Viktor Mayer-Schönberger, Manuela Lenzen, Irights.Lab and José van Dijck and contributions of Elisa Rapetti and Marco Oberosler. DARE Blue Lines, Democracy and Human Rights Education in Europe, Brussels 2020.